Hort-YOLO: A multi-crop deep learning model with an integrated semi-automated annotation framework

A research group led by Associate Professor Md Parvez Islam and Professor Kenji Hatou of the Graduate School of Agriculture at Ehime University has developed "Hort-YOLO," a real-time monitoring system for horticultural crops. The system is an object detection network designed for accurate, context-aware analysis of crops. It also balances annotation speed against the scalability of supervised learning, which makes it practical to deploy in real-world environments.

This study addresses the challenge of accurate object detection under highly variable lighting conditions (ambient and artificial). We introduce a novel architecture, Hort-YOLO, which features a custom backbone, DeepD424v1, and a redesigned YOLOv4 head. The DeepD424v1 backbone is built on a modular, asymmetric structure that extracts discriminative multi-scale global–local spatial features. It fuses features from different depths to prevent the loss of feature perception while improving recognition speed and accuracy. The network’s asymmetric branches, with multi-scale and parallel downsampling layers, gradually reduce the spatial size of the feature maps. This process extracts fine-to-coarse details with richer feature information and generates diverse contextual information in both the spatial and channel dimensions. The design reduces computational complexity and enhances the representation learning capability of the convolutional neural network (CNN). The model size is approximately 2.6 × 10² MB. The improved Spatial Pyramid Pooling Module (SPPM) of the Hort-YOLO detector can accurately locate a target object even when its pixel size is less than 5% of the input image.

A comparative performance evaluation was conducted on a class-imbalanced, dynamic, and noisy horticultural dataset. Despite low to moderate levels of class imbalance, Models 1, 2, and 4 achieved a higher F1 score of 0.68 on the validation dataset. In a comparison with other object detectors, including YOLOv10 (n, s, m, l, x, b), YOLOv11 (n, s, m, l, x), YOLOv12 (n, s, m, l, x), YOLOx (medium coco), and both standard and modified YOLOv4, Hort-YOLO achieved mAP@0.5 and recall scores of 0.77 and 0.80, respectively.

This study also demonstrates the efficiency of a semi-automatic annotation process, which reduces annotation time by a factor of 5 to 6. This annotation framework will help scale up supervised learning by efficiently processing large datasets. Hort-YOLO also proves robust under varying lighting, occlusion, and background complexity, detecting objects at 15 to 30 frames per second (FPS) in a real-world scenario.
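For readers unfamiliar with spatial pyramid pooling, the sketch below illustrates the general idea behind the pooling module mentioned in the abstract. It shows the standard YOLOv4-style variant (parallel stride-1 max pooling at several kernel sizes, stacked with the input), not the improved SPPM described in the paper, whose details are not given here. The function names `max_pool_same` and `spp` and the kernel sizes are assumptions chosen for illustration, and the feature map is a plain 2D list rather than a real tensor.

```python
def max_pool_same(fmap, k):
    """Stride-1 max pooling over a 2D list, keeping the input size.

    Windows are clipped at the borders, which is equivalent to padding
    with negative infinity. k is assumed to be an odd kernel size.
    """
    h, w = len(fmap), len(fmap[0])
    r = k // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = [
                fmap[y][x]
                for y in range(max(0, i - r), min(h, i + r + 1))
                for x in range(max(0, j - r), min(w, j + r + 1))
            ]
            row.append(max(window))
        out.append(row)
    return out


def spp(fmap, kernel_sizes=(5, 9, 13)):
    """Stack the input with its pooled copies, one 'channel' per scale.

    Hypothetical helper: the kernel sizes follow the common YOLOv4
    default and are not taken from the Hort-YOLO paper.
    """
    return [fmap] + [max_pool_same(fmap, k) for k in kernel_sizes]


if __name__ == "__main__":
    # A single small "object" (one active pixel) on a 4x4 feature map.
    fmap = [
        [0, 0, 0, 0],
        [0, 0, 1, 0],
        [0, 0, 0, 0],
        [0, 0, 0, 0],
    ]
    for channel in spp(fmap, kernel_sizes=(3, 5)):
        print(channel)
```

Each pooled channel spreads the response of the small object over a progressively larger neighbourhood while keeping the spatial size unchanged, so later layers see the same location at several receptive-field scales.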

Reference URL: https://doi.org/10.1016/j.compag.2025.111196

Bibliographic Information

Title: Hort-YOLO: A multi-crop deep learning model with an integrated semi-automated annotation framework

Authors: M.P. Islam, K. Hatou, K. Shinagawa, S. Kondo, Y. Kadoya, M. Aono, T. Kawara, K. Matsuoka

Journal: Computers and Electronics in Agriculture, 240, 111196

DOI: 10.1016/j.compag.2025.111196, 2026 (available online 15 November 2025).

Funding

- Japan Society for the Promotion of Science (24K09164)

Media

- Multi-crop deep learning model integrated with an AI-assisted semi-automatic annotation framework

Network-centric Hort-YOLO models vs cultivation goals

credit : Md Parvez Islam(Ehime University)

Usage Restriction : Please get copyright permission

- Multi-crop deep learning model integrated with an AI-assisted semi-automatic annotation framework

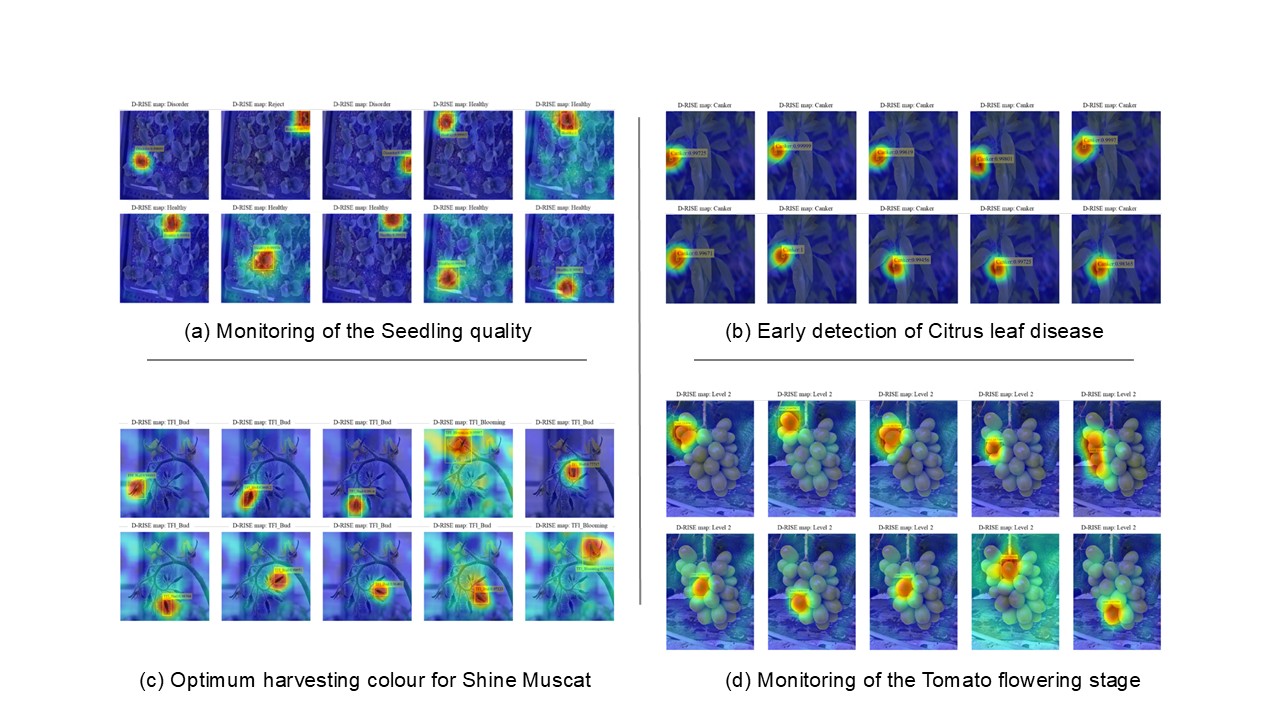

Saliency maps for assessing the efficiency of semi-automated data annotation tasks

credit : Md Parvez Islam(Ehime University)

Usage Restriction : Please get copyright permission

Contact Person

Name : Md Parvez Islam

Phone : +81-89-946-9823

E-mail : islam.md_parvez.by@ehime-u.ac.jp

Affiliation : Department of Biomechanical Systems, Faculty of Agriculture, Ehime University